The rapid growth of generative AI has redefined how enterprises handle customer engagement, automate processes, and extract value from data. Yet, as businesses rush to integrate large language models (LLMs) into their workflows, a critical question arises: where should these models be deployed?

Public LLM APIs like OpenAI or Anthropic offer agility, but they introduce significant challenges—data leakage, regulatory risks, and limited customization. For enterprises operating in regulated industries such as healthcare, BFSI, pharma, manufacturing, and defense, the trade-offs are too costly. Sensitive data can’t be sent across the public internet, intellectual property must remain secure, and governance controls are non-negotiable.

This is where on-premise LLM deployment becomes a game-changer. By running AI models within their firewall, enterprises maintain full control of infrastructure, data pipelines, and compliance frameworks—without sacrificing the innovation that generative AI offers.

In this blog, we’ll explore why enterprises are turning to on-prem deployments, the unique benefits, key industry use cases, and how organizations can prepare for a secure AI future.

The Rising Enterprise Need for Secure AI

AI adoption across enterprises has surged over the last three years, driven by advancements in generative models and an urgent need to modernize business operations. According to a Gartner survey, nearly 80% of enterprises plan to adopt generative AI within the next three years, with many already piloting proof-of-concept initiatives. This acceleration, however, is not without hurdles—especially when sensitive data is at stake.

In industries like banking, healthcare, and defense, data confidentiality is mission-critical. A financial institution cannot risk exposing transaction histories to third-party APIs, and a hospital cannot share patient data with an external model provider unless HIPAA safeguards, such as a business associate agreement, are in place. Even in non-regulated sectors, enterprises are realizing that customer trust and intellectual property (IP) protection hinge on how data is managed during AI interactions.

Moreover, cybersecurity threats have raised the stakes. Each public API integration adds an outbound data path and a set of credentials that must be secured, and a misconfigured integration can expose prompts or keys. Enterprises that prioritize data sovereignty, compliance, and risk mitigation are increasingly shifting toward on-premise or private AI deployments as a safeguard.

What emerges is a clear trend: while generative AI is transformative, its true enterprise potential lies in secure deployment models that align with both business objectives and regulatory requirements. On-premise LLMs offer a pathway to achieve this balance—innovation without compromise.

What is On-Premise LLM Deployment?

On-premise LLM deployment refers to running large language models entirely within an organization’s internal infrastructure or firewall, rather than relying on external cloud-based APIs. In this setup, enterprises host, manage, and govern the model on their own hardware or secure private environments.

This approach differs significantly from the public SaaS model of AI, where data must leave the enterprise environment to be processed by third-party providers. With on-prem deployment, enterprises retain end-to-end control—from data ingestion and model fine-tuning to monitoring and compliance enforcement.

There are three primary approaches enterprises adopt for on-premise AI deployment:

- Bare-metal servers: Dedicated GPU-powered infrastructure hosted in data centers.

- Private cloud setups: Virtualized environments operated behind the enterprise firewall, enabling elasticity while retaining data control.

- Hybrid deployments: A mix of on-prem and secure private cloud, where sensitive workloads remain local while less critical processes leverage cloud efficiency.

For organizations that value data sovereignty, compliance, and security, on-premise deployment ensures that sensitive information never leaves controlled infrastructure. It not only reduces exposure to external risks but also provides the flexibility to customize LLMs for proprietary use cases.
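One way to make "never leaves controlled infrastructure" enforceable in application code is an allowlist check on every inference endpoint. The following is a minimal sketch, not a complete network control: the hostnames in `ALLOWED_HOSTS`, the function names, and the `{"prompt": ...}` payload shape are all hypothetical, and a real deployment would also rely on firewall and egress rules.

```python
from urllib.parse import urlparse

# Hypothetical internal hostnames; replace with your own inference endpoints.
ALLOWED_HOSTS = {"llm.internal.example", "localhost", "127.0.0.1"}


def is_internal_endpoint(url: str) -> bool:
    """Return True only if the URL's host is on the internal allowlist."""
    host = urlparse(url).hostname  # None when the URL has no scheme/host
    return host is not None and host in ALLOWED_HOSTS


def build_request(url: str, prompt: str) -> dict:
    """Describe an inference request, refusing any endpoint off the allowlist."""
    if not is_internal_endpoint(url):
        raise ValueError(f"Refusing to send prompt to non-internal host: {url}")
    # The payload shape is an assumption; match it to your model server.
    return {"url": url, "json": {"prompt": prompt}}
```

Placing the check in the one function that builds requests means a misconfigured URL fails loudly before any prompt is sent.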

Why Enterprises Choose On-Prem LLM Deployment

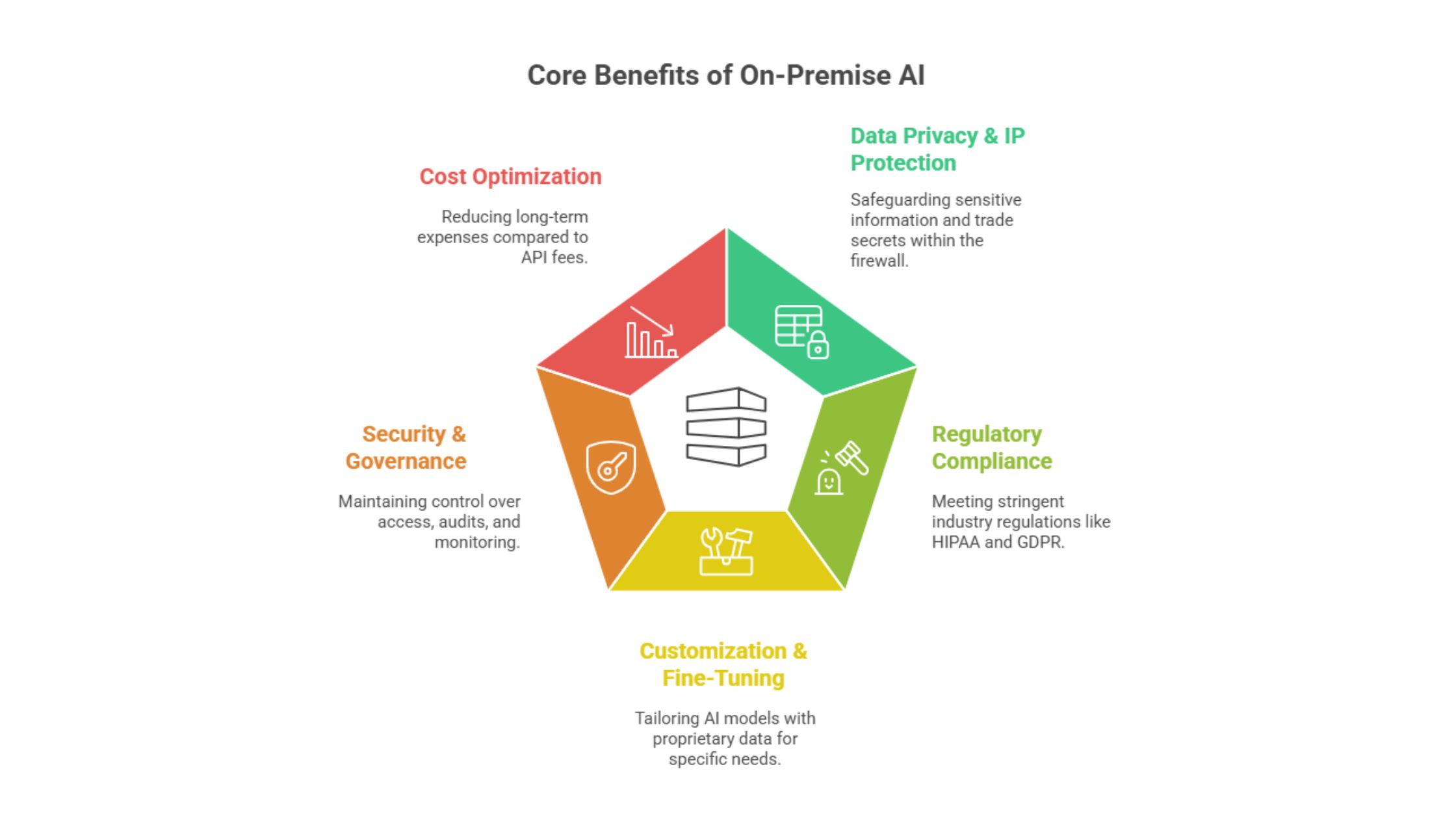

Enterprises don’t take the decision to host large language models within their own infrastructure lightly. On-premise deployment requires planning, investment, and specialized expertise. Yet, for organizations in regulated sectors—or those simply seeking more control—this approach delivers clear advantages. Below are the five primary reasons enterprises are embracing on-premise LLM deployment.

4.1 Data Privacy & IP Protection

Data has become the most valuable enterprise asset, and protecting it is paramount. When using public LLM APIs, sensitive inputs such as customer transactions, medical records, or proprietary R&D data could potentially be exposed or logged outside the organization’s control.

On-prem deployment ensures that all training data, prompts, and generated outputs remain within the firewall. This not only protects trade secrets but also builds confidence among stakeholders and customers that their information is never leaving the organization’s secure environment.

For instance, a global bank building customer-facing AI assistants can’t risk account details being shared with external model providers. Hosting the model in-house eliminates this risk while enabling highly personalized, secure interactions.

4.2 Regulatory Compliance

From GDPR in Europe to HIPAA in the U.S. healthcare system, regulations around data handling have become more stringent than ever. Enterprises operating across borders must comply with multiple overlapping rules.

On-premise deployment offers a compliance-friendly architecture because data can be kept within a single geographic and jurisdictional boundary. This makes it easier for organizations to demonstrate regulatory alignment and reduces the risk of heavy penalties.

For example, a pharmaceutical company conducting drug discovery must protect patient trial data. With an on-prem setup, the company can run generative AI pipelines while meeting all clinical data privacy requirements.

4.3 Customization & Fine-Tuning

Public LLMs are typically trained on generalized datasets, limiting their ability to address industry-specific or company-specific needs. On-prem deployment allows enterprises to fine-tune models with proprietary datasets, embedding institutional knowledge and domain-specific intelligence into the model.

This level of customization means a legal firm can create an AI assistant trained on case law and contracts, while a manufacturer can optimize models for predictive maintenance and process optimization.

Additionally, improving embeddings, context handling, and knowledge retrieval becomes more effective when customization happens within the enterprise environment. Companies can adopt approaches such as chunking strategies for LLM applications to enhance retrieval-augmented generation (RAG) pipelines.
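To make the chunking idea concrete, here is a minimal sketch of one common approach: fixed-size, word-based chunks with overlap, so that context spanning a boundary appears in both neighbors. The function name and default sizes are illustrative assumptions; production pipelines often chunk by tokens, sentences, or document structure instead.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into chunks of up to chunk_size words, overlapping by `overlap` words."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks
```

Larger overlaps reduce the chance of splitting a relevant passage but increase index size, so the two parameters are usually tuned against retrieval quality on real queries.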

4.4 Security & Governance

Security isn’t only about data protection—it’s also about governance. On-premise LLM deployment enables enterprises to implement strict role-based access, audit trails, and monitoring systems. This ensures that only authorized personnel interact with sensitive AI systems and that every interaction is logged for compliance.

CISOs and enterprise IT teams gain the ability to enforce governance frameworks at every stage of the AI lifecycle, from training data management to production monitoring. By centralizing control within the firewall, organizations reduce the risk of insider misuse and external breaches.
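The combination of role-based access and audit trails described above can be sketched as a thin gateway in front of the model. This is an illustrative outline only: the roles, permissions, and the `AuditedGateway` class are hypothetical, and a real system would persist the log to tamper-evident storage and take identities from the enterprise identity provider.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "analyst": {"query"},
    "ml_engineer": {"query", "fine_tune"},
    "admin": {"query", "fine_tune", "view_audit_log"},
}


@dataclass
class AuditedGateway:
    """Checks a role's permission for an action and records every attempt."""
    audit_log: list = field(default_factory=list)

    def authorize(self, user: str, role: str, action: str) -> bool:
        # Unknown roles get an empty permission set, so they are denied.
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        # Log both allowed and denied attempts so reviewers see the full picture.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "allowed": allowed,
        })
        return allowed
```

Recording denied attempts alongside allowed ones is a deliberate choice here: repeated denials are often the earliest signal of misuse.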

4.5 Cost Optimization in the Long Run

At first glance, building and maintaining GPU-powered infrastructure may seem expensive compared to tapping into pay-as-you-go API services. However, enterprises with large-scale AI use cases often find on-premise deployment more cost-effective in the long run.

Recurring API costs can escalate rapidly when running millions of queries per day or fine-tuning models with large datasets. On-prem deployments, while involving upfront investment, eliminate dependency on external providers and offer predictable cost structures.

Moreover, amortizing infrastructure costs over years of use—while enabling multiple AI projects on the same hardware—can lead to a favorable total cost of ownership (TCO).
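The TCO argument comes down to simple break-even arithmetic: upfront CapEx is recovered at the rate by which monthly API spend exceeds monthly on-prem operating cost. The sketch below shows that calculation; the function name is hypothetical and every figure in the usage example is an illustrative assumption, not a benchmark.

```python
from typing import Optional


def breakeven_months(capex: float, onprem_monthly_opex: float,
                     api_monthly_cost: float) -> Optional[float]:
    """Months until cumulative API spend matches on-prem CapEx plus OpEx.

    Returns None when on-prem never breaks even, i.e. when its monthly
    operating cost is at least as high as the API bill.
    """
    monthly_saving = api_monthly_cost - onprem_monthly_opex
    if monthly_saving <= 0:
        return None
    return capex / monthly_saving


# Illustrative numbers only: 600k hardware, 15k/month to run, 40k/month API bill.
months = breakeven_months(600_000, 15_000, 40_000)
print(months)
```

A model like this omits staffing, hardware refresh cycles, and utilization, so it is best treated as a first-pass screen rather than a full TCO analysis.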

In short, enterprises are choosing on-premise LLM deployment because it delivers what public APIs cannot: uncompromising security, compliance readiness, tailored customization, and long-term economic efficiency.

On-Prem vs Private Cloud Deployment

Enterprises exploring private LLMs often weigh two main options: on-premise and private cloud deployment. While both prioritize security and compliance, their suitability depends on industry, scale, and governance requirements.

When to Choose On-Prem LLM Deployment

- Organizations with stringent compliance obligations (e.g., defense, BFSI).

- Enterprises needing air-gapped environments for maximum security.

- Workloads involving highly sensitive intellectual property or national security data.

- Enterprises with large in-house IT and infrastructure teams capable of maintaining GPUs, clusters, and monitoring systems.

When to Choose Private Cloud LLM Deployment

- Enterprises seeking a balance of scalability and security.

- Industries where compliance permits secure private cloud use (e.g., pharma R&D).

- Organizations without the capacity to maintain on-prem hardware but still unwilling to expose data to public APIs.

- Companies wanting elastic GPU scaling during high-demand use cases (e.g., training surges).

Key Comparison: On-Prem vs Private Cloud LLM Deployment

| Feature / Factor | On-Premise LLM Deployment | Private Cloud LLM Deployment |
| --- | --- | --- |
| Data Control | Full data sovereignty; never leaves firewall | Strong data control, but relies on vendor hosting |
| Compliance Fit | Ideal for highly regulated sectors (Healthcare, BFSI, Defense) | Suitable for industries with moderate regulations |
| Security | Air-gapped, maximum security with enterprise IT control | High security, but vendor-managed infrastructure |
| Customization | Deep fine-tuning and governance flexibility | Moderate customization, often tied to cloud vendor tools |
| Scalability | Limited by in-house hardware; requires upfront investment | Elastic scaling, pay for GPU usage as needed |
| Cost Structure | High initial CapEx, lower OpEx over time | Subscription or consumption-based pricing |
| Maintenance | Enterprise IT responsible for full lifecycle management | Vendor handles updates, but less transparency |
| Use Case Examples | Defense intelligence, BFSI fraud detection, government AI | Pharma R&D, manufacturing simulations, cross-border teams |

For enterprises that require absolute control, governance, and compliance assurance, on-prem remains the gold standard. However, when scalability and operational efficiency are key, private cloud can provide a middle ground—especially for organizations still building their in-house AI infrastructure capabilities.

Industry Use Cases for On-Prem LLMs

The advantages of on-premise deployment become most evident when applied to industry-specific use cases. Each sector has unique data sensitivity and compliance requirements that make public LLM APIs unsuitable. Below are examples of how on-premise deployment enables enterprises to innovate securely.

1. Healthcare – HIPAA-Compliant Clinical Documentation

Hospitals and healthcare networks handle electronic health records (EHRs), diagnostic data, and patient notes that fall under HIPAA regulations. Deploying LLMs on-prem ensures:

- Automated transcription and summarization of clinical notes.

- HIPAA compliance by keeping all patient data within the firewall.

- AI-powered decision support systems for doctors without risking sensitive health data exposure.

2. BFSI – Fraud Detection and Secure Client Advisory

Banks and financial institutions must comply with rules from regulators such as India's RBI and SEBI and with data protection laws such as GDPR, while also protecting customer trust. On-prem LLMs can:

- Detect fraud patterns in real-time using transaction histories.

- Power secure customer-facing chatbots for personalized financial advisory.

- Ensure compliance with audit trails and strong governance controls.

3. Manufacturing – Proprietary Process Optimization

Manufacturers operate with trade secrets, proprietary workflows, and supply chain intelligence. With on-prem LLMs, they can:

- Automate process documentation and quality assurance.

- Deploy predictive maintenance models using historical machine data.

- Safeguard intellectual property from leaving enterprise networks.

4. Pharma – Drug Research & Clinical Trials

Pharmaceutical firms conduct drug discovery and clinical research that involves sensitive trial datasets and intellectual property. On-prem LLMs enable:

- Knowledge retrieval across R&D databases.

- Summarization of medical literature and trial reports.

- Secure collaboration among researchers while complying with regulatory audits.

5. Defense – Secure Intelligence Analysis

Defense and aerospace organizations demand air-gapped, maximum-security AI environments. With on-prem deployment, they can:

- Analyze intelligence reports in real time.

- Enhance cybersecurity defense with AI-driven anomaly detection.

- Train models on classified data without external exposure.

By aligning deployment with sector-specific regulatory frameworks and security needs, on-premise LLMs empower enterprises to harness generative AI without compromising compliance or confidentiality.

Challenges in On-Premise LLM Deployment

While the benefits of on-premise LLM deployment are compelling, enterprises must also be aware of the operational and technical hurdles that come with it. Unlike public APIs, where infrastructure is managed externally, on-prem solutions shift responsibility to the organization’s IT and AI teams.

1. Infrastructure Requirements

Running large language models demands high-performance GPUs, accelerators, and distributed computing clusters. These components are expensive and often in short supply, creating procurement and scaling challenges. Enterprises without established data centers may struggle to maintain the necessary capacity.
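As a rough sizing aid for these hardware requirements, the memory needed just to hold model weights is approximately the parameter count multiplied by the bytes per parameter. The sketch below applies that rule of thumb. The `overhead` fraction (standing in for KV cache and activations) and the 80 GB per-GPU default are assumptions chosen for illustration; actual needs depend on context length, batch size, and the serving stack.

```python
import math

# Bytes used to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate memory for model weights only, in GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def gpus_needed(num_params: float, precision: str = "fp16",
                gpu_memory_gb: float = 80.0, overhead: float = 0.2) -> int:
    """Rough lower bound on GPU count; `overhead` is an assumed extra fraction."""
    total_gb = weight_memory_gb(num_params, precision) * (1 + overhead)
    return math.ceil(total_gb / gpu_memory_gb)


# A 7-billion-parameter model in fp16 needs about 14 GB for weights alone.
print(weight_memory_gb(7e9, "fp16"))
```

The same arithmetic shows why quantization matters for on-prem planning: halving the bytes per parameter halves the weight footprint.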

2. Skilled Workforce Shortage

On-prem LLM deployment requires expertise across machine learning engineering, DevOps, MLOps, and cybersecurity. Many enterprises lack internal teams with the depth of knowledge needed to set up, monitor, and optimize AI infrastructure effectively. Recruiting and training such talent can be time-consuming and costly.

3. Maintenance and Model Updates

LLMs evolve rapidly, with frequent releases and improvements in accuracy, efficiency, and safety. Ensuring that models remain up-to-date, patched, and optimized requires a structured update strategy. Enterprises that fail to keep pace risk running outdated or less secure AI systems.

4. Cost and Resource Management

Although on-prem deployment can lead to long-term savings, the upfront CapEx investment is substantial. Organizations must budget for hardware acquisition, setup, and continuous power and cooling costs. Without proper planning, infrastructure bottlenecks may reduce ROI.

5. Vendor Lock-In and Compatibility

Enterprises must carefully evaluate their technology stack to avoid lock-in with a single vendor’s hardware or software ecosystem. Compatibility with existing ERP, CRM, and data pipelines also requires strategic planning, or the deployment may face integration roadblocks.

In short, while on-prem deployment empowers enterprises with control and compliance, it also requires significant foresight, resources, and governance to overcome these challenges.

Best Practices for Successful On-Prem Deployment

Enterprises that succeed with on-premise LLM deployment follow structured strategies to balance performance, compliance, and cost efficiency. Below are proven best practices for ensuring smooth adoption.

1. Hardware and Infrastructure Planning

- Invest in GPU clusters or AI accelerators capable of handling large-scale training and inference.

- Design infrastructure with redundancy and scalability in mind to accommodate future AI workloads.

- Optimize for power efficiency and cooling to keep long-term operating costs manageable.

2. Data Pipeline Readiness

- Ensure enterprise data is cleaned, chunked, and indexed before feeding it into the model.

- Use optimized techniques for retrieval-augmented generation (RAG) to boost accuracy.

- Adopt tools for ETL (Extract, Transform, Load) to unify structured and unstructured datasets for training.
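The "indexed" step in the list above can be illustrated with a small retrieval sketch. To stay dependency-free it swaps in plain keyword overlap over an inverted index; production RAG systems typically use embedding-based vector search instead. All function names here are hypothetical.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric terms."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def build_index(chunks: list[str]) -> dict[str, set[int]]:
    """Map each term to the indices of the chunks that contain it."""
    index: dict[str, set[int]] = defaultdict(set)
    for i, chunk in enumerate(chunks):
        for term in tokenize(chunk):
            index[term].add(i)
    return index


def retrieve(query: str, chunks: list[str], index: dict[str, set[int]],
             top_k: int = 2) -> list[str]:
    """Return up to top_k chunks sharing the most terms with the query."""
    scores: dict[int, int] = defaultdict(int)
    for term in tokenize(query):
        for i in index.get(term, ()):
            scores[i] += 1
    # Highest score first; ties broken by original chunk order.
    ranked = sorted(scores, key=lambda i: (-scores[i], i))
    return [chunks[i] for i in ranked[:top_k]]
```

The retrieved chunks would then be placed in the model's prompt as context, which is the "augmented" part of retrieval-augmented generation.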

3. Governance and Monitoring Frameworks

- Establish role-based access controls (RBAC) to secure interactions with AI systems.

- Implement continuous monitoring with audit trails and anomaly detection.

- Define AI governance policies aligned with legal and ethical standards to mitigate compliance risks.
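For the anomaly-detection item above, one simple starting point is a z-score check on usage counts, for example requests per user per hour. The sketch below is a minimal illustration under that assumption; the function name and the threshold of 3 standard deviations are arbitrary choices, and real monitoring would usually use more robust statistics and rolling windows.

```python
from statistics import mean, pstdev


def flag_anomalies(counts: list[int], threshold: float = 3.0) -> list[int]:
    """Return indices whose value is more than `threshold` std devs from the mean."""
    mu = mean(counts)
    sigma = pstdev(counts)  # population standard deviation of the window
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [i for i, c in enumerate(counts) if abs(c - mu) / sigma > threshold]
```

Flagged indices can be cross-referenced with the audit trail to see which user and action produced the spike.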

4. Skilled Workforce Development

- Train internal teams in MLOps, DevSecOps, and AI governance practices.

- Partner with experienced AI vendors for deployment support and knowledge transfer.

- Encourage cross-functional collaboration among data science, IT, and compliance teams.

5. Integration with Enterprise Systems

- Seamlessly connect LLMs with existing ERP, CRM, and business intelligence tools for maximum value.

- Use APIs and middleware to minimize friction during deployment.

- Focus on interoperability to avoid vendor lock-in and ensure future-proofing.

By combining robust infrastructure, clean data, strong governance, and workforce readiness, enterprises can mitigate risks and maximize ROI from on-premise LLM initiatives.

Why Choose AIVeda for On-Premise LLM Deployment

Enterprises exploring on-premise LLM deployment need more than just infrastructure—they need a trusted partner who understands the challenges of secure, scalable, and compliant AI adoption. This is where AIVeda stands out.

1. Expertise in Secure AI for Regulated Industries

We specialize in working with industries like healthcare, BFSI, pharma, manufacturing, and defense, where compliance and data sovereignty are non-negotiable. Our solutions are built to align with HIPAA, GDPR, RBI/SEBI, and other global regulations.

2. End-to-End Customization

Every enterprise has unique datasets and workflows. AIVeda offers custom fine-tuning of LLMs on proprietary data, ensuring the model reflects your business knowledge while maintaining privacy. From retrieval-augmented generation (RAG) to embedding optimization, our experts deliver solutions tailored to your needs.

3. Secure Deployment Models

Whether you require air-gapped on-premise systems or private cloud hosting, we design deployment architectures that safeguard your most valuable assets—your data and intellectual property.

4. Cost-Efficient AI Adoption

We help enterprises strike the right balance between CapEx and OpEx by optimizing infrastructure usage. Our deployment strategies are designed to lower long-term costs while enabling scalability across multiple AI initiatives.

5. Proven Track Record in Enterprise AI

AIVeda’s experience in large-scale AI solutions, conversational AI, and custom LLM development positions us as a reliable partner for organizations ready to bring generative AI inside their firewall.

With AIVeda, enterprises don’t just deploy AI—they gain a secure, future-ready foundation for innovation, compliance, and growth.

Future Outlook – The Business Case for On-Prem AI

The momentum behind enterprise AI adoption shows no signs of slowing. As organizations expand their generative AI initiatives, deployment architecture will become a key differentiator between those who innovate securely and those who expose themselves to risks.

According to Statista, global spending on AI systems is projected to reach over $300 billion by 2030, with a growing share dedicated to infrastructure and compliance-ready solutions. This trend underscores the importance of on-premise and hybrid models as enterprises seek more sovereign control over their AI ecosystems.

Looking ahead, enterprises are likely to embrace:

- Hybrid models, where critical workloads remain on-prem while scalable, low-risk processes leverage private cloud.

- Smaller, more efficient LLMs designed to run cost-effectively on enterprise-grade hardware.

- Stronger governance frameworks, ensuring ethical AI and compliance across jurisdictions.
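The hybrid model in the first item above implies a routing decision for each request: sensitive workloads stay on-prem, the rest may go to private cloud. Here is a minimal sketch of such a router. The patterns and the two target names are hypothetical stand-ins for a real data-classification policy, which would be far more thorough than a few regular expressions.

```python
import re

# Hypothetical patterns standing in for a real data-classification policy.
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # US SSN-like number
    re.compile(r"\b(patient|diagnosis|account number)\b", re.IGNORECASE),
]


def route(prompt: str) -> str:
    """Send prompts that look sensitive to on-prem; everything else to private cloud."""
    if any(p.search(prompt) for p in SENSITIVE_PATTERNS):
        return "on_prem"
    return "private_cloud"
```

Pattern matching can miss sensitive content, so routers like this are often configured to default to on-prem whenever classification is uncertain.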

The business case for on-prem AI goes beyond regulatory compliance. It empowers organizations to innovate faster, protect intellectual property, and build customer trust by keeping sensitive data in-house. For CIOs, CTOs, and CISOs, this makes on-premise deployment not just a technology choice, but a strategic imperative.

Conclusion

As enterprises scale their AI ambitions, the deployment model becomes as critical as the model itself. Public APIs may deliver speed, but they often compromise on the very principles that enterprises value most—security, compliance, customization, and cost predictability.

On-premise LLM deployment offers a powerful alternative, placing AI capabilities inside the firewall where sensitive data, intellectual property, and regulatory requirements can be safeguarded. For industries like healthcare, BFSI, pharma, manufacturing, and defense, this isn’t just a technology preference—it’s a strategic necessity.

The future of enterprise AI will be defined by trust and control, and organizations that act today will be positioned to lead tomorrow.

If your organization is ready to explore secure and scalable AI adoption, discover how AIVeda’s Large Language Models can help you deploy on-premise solutions tailored to your business needs.