Private LLM Development Services by AIVeda

Secure, Controlled, and Enterprise-Ready Generative AI

AIVeda’s private LLM development services enable enterprises to design, build, and deploy large language models within their own secure environments, giving them full control over their data, supporting regulatory compliance, and tailoring model behavior to their domain. Our private LLMs are engineered to operate on dedicated infrastructure, delivering high-performance generative AI without exposing sensitive data to public models or third-party platforms.

Key Features of Our Private LLM Development Services

Enterprise-Controlled Model Architecture

Our private LLM development services are designed around complete enterprise ownership. Models are deployed within your dedicated infrastructure—on-premise or private cloud—ensuring full control over data flows, model behavior, and access policies without reliance on shared or public AI platforms.

Domain-Specific Large Language Intelligence

AIVeda develops private LLMs trained and fine-tuned on enterprise-approved datasets. These models understand industry-specific language, internal documentation, workflows, and regulatory constraints—making them suitable for sectors such as BFSI, healthcare, legal, manufacturing, and government.

Secure-by-Design AI Deployment

Security is embedded across the entire LLM lifecycle. We implement encryption at rest and in transit, role-based access control, secure inference endpoints, and audit logging. Our development practices align with enterprise compliance requirements including GDPR, HIPAA, ISO 27001, and SOC 2.
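To make the access-control and audit-logging layer more concrete, here is a minimal Python sketch of a role check placed in front of an inference call. The role names, the `ROLE_PERMISSIONS` mapping, and the stub model function are hypothetical. A production system would pull roles from the enterprise identity provider and write audit records to tamper-evident storage rather than an in-memory list.

```python
import json
import time
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical role-to-permission mapping; in practice this would come from the
# enterprise identity provider or a central policy store.
ROLE_PERMISSIONS = {
    "analyst": {"generate"},
    "admin": {"generate", "fine_tune", "view_audit_log"},
}


@dataclass
class AuditLog:
    """In-memory stand-in for an append-only audit trail."""
    entries: list = field(default_factory=list)

    def record(self, user: str, action: str, allowed: bool) -> None:
        # Log who attempted which action and the decision; the prompt itself
        # is intentionally not written to the log.
        self.entries.append(json.dumps(
            {"ts": time.time(), "user": user, "action": action, "allowed": allowed}
        ))


def authorize(user: str, role: str, action: str, log: AuditLog) -> bool:
    """Role-based check: unknown roles get no permissions. Every decision is logged."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    log.record(user, action, allowed)
    return allowed


def stub_model(prompt: str) -> str:
    # Placeholder for the privately hosted model's inference call.
    return f"response to a {len(prompt)}-character prompt"


def handle_inference(user: str, role: str, prompt: str, log: AuditLog,
                     model: Callable[[str], str] = stub_model) -> str:
    # Deny before the prompt ever reaches the model.
    if not authorize(user, role, "generate", log):
        raise PermissionError(f"{user} ({role}) is not permitted to call generate")
    return model(prompt)


if __name__ == "__main__":
    log = AuditLog()
    print(handle_inference("alice", "analyst", "Summarize policy 4.2", log))
    try:
        handle_inference("bob", "contractor", "List salary bands", log)
    except PermissionError as err:
        print("denied:", err)
    print(len(log.entries), "audit entries")
```

Both the allowed and the denied request leave an audit entry, which is what makes access decisions reviewable after the fact.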

Controlled Fine-Tuning & Model Governance

Our private LLM pipelines support supervised fine-tuning, instruction tuning, and reinforcement learning-based tuning under strict governance. Enterprises can update model behavior incrementally without retraining from scratch, improving performance while preserving model stability.
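One common way to update model behavior without retraining from scratch is a parameter-efficient method such as LoRA (low-rank adaptation). In LoRA the pretrained weight matrix stays frozen and only a small low-rank correction is trained. The pure-Python sketch below is illustrative only and assumes LoRA as the technique, since the page does not say which method is used. Real pipelines would rely on a framework such as PyTorch with a PEFT library rather than nested lists.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


class LoRALinear:
    """Frozen base weight W plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, weight, rank=2, alpha=4.0, seed=0):
        rng = random.Random(seed)
        self.weight = weight  # frozen pretrained weight, shape (d_out, d_in)
        d_out, d_in = len(weight), len(weight[0])
        self.scale = alpha / rank
        # A gets small random values; B starts at zero, so the adapter is a
        # no-op until it is trained and the base model's behavior is preserved.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]

    def forward(self, x):
        base = matvec(self.weight, x)
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * d for b, d in zip(base, delta)]

    def trainable_params(self):
        # Only A (rank x d_in) and B (d_out x rank) would be updated during fine-tuning.
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


if __name__ == "__main__":
    layer = LoRALinear([[1.0, 0.0], [0.0, 1.0]], rank=1)
    print(layer.forward([3.0, 4.0]))  # same as the base layer at initialization
    d, r = 4096, 8  # illustrative square layer size and adapter rank
    print("full fine-tuning:", d * d, "LoRA:", r * (d + d))
```

For a 4096 x 4096 layer with rank 8, the adapter trains 65,536 parameters instead of 16,777,216, roughly 0.4% of the layer. Because the base weights are untouched, an adapter can be swapped out or removed to return to the original model.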

Infrastructure-Optimized Performance

Private LLMs are engineered to align with your compute environment. We optimize inference throughput, memory usage, and latency through model compression, quantization, and hardware-aware deployment strategies—balancing performance with infrastructure cost efficiency.
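As a rough illustration of how quantization trades precision for memory, the sketch below applies symmetric per-tensor int8 quantization. Each weight is mapped to an integer in [-127, 127] using one shared scale factor. This is an assumed, deliberately simple scheme. Production deployments typically use per-channel or group-wise scales and optimized library kernels.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp to the symmetric int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q, scale):
    """Recover approximate float weights from int8 codes."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.42, -1.27, 0.003, 0.9]
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print(q, scale, max_err)
    # Each weight now needs 1 byte instead of 4 (float32), plus one shared scale.
```

The rounding error per weight is at most half the scale, which is why outlier weights (which inflate the scale) motivate finer-grained schemes.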

Seamless Enterprise System Integration

AIVeda enables private LLMs to integrate smoothly with internal systems such as knowledge bases, ERPs, CRMs, document repositories, and analytics platforms. API-first design ensures secure interoperability across enterprise workflows and internal applications.

Use Cases of Private LLMs

Secure Enterprise Knowledge Assistants

Deploy private LLMs that interact with internal knowledge bases, policies, technical documentation, and training materials—delivering accurate, context-aware responses while ensuring sensitive enterprise data never leaves your environment.

Customer Support & Service Automation

Enable AI-driven support systems that handle tier-one queries, generate contextual responses, summarize conversations, and assist agents—without exposing customer data to public LLM APIs or external inference platforms.

Document, Legal, and Contract Intelligence

Use private LLMs to analyze, summarize, and classify contracts, compliance documents, and regulatory text. Ideal for legal, finance, and procurement teams that require confidentiality, traceability, and controlled access.

Financial and Risk Intelligence

Process internal financial reports, transaction data, and risk documentation using private LLMs to generate insights, detect inconsistencies, and support decision-making—while maintaining strict data governance and auditability.

Healthcare and Life Sciences Applications

Support clinical documentation workflows, medical knowledge retrieval, and internal research analysis using private LLMs deployed within compliant environments. Designed to align with healthcare data protection standards and controlled access requirements.

Enterprise Search & Knowledge Retrieval

Power advanced search, summarization, and retrieval across large volumes of internal enterprise content—emails, wikis, SOPs, product manuals—using private LLMs integrated with secure retrieval pipelines.
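The retrieval step behind this kind of search can be sketched in a few lines. The example below is a minimal, assumed design. It filters documents by the caller's access groups before ranking and scores them with term-frequency cosine similarity. That scoring is a stand-in for the embedding models and vector stores a real pipeline (for example one built with LangChain or LlamaIndex) would use. Finally, it assembles the top matches into a grounded prompt. The documents and group names are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical internal documents, each labeled with the groups allowed to read it.
DOCUMENTS = [
    {"id": "sop-12", "groups": {"ops"}, "text": "Restart the billing service after a failed nightly batch."},
    {"id": "wiki-7", "groups": {"ops", "support"}, "text": "Customers can reset passwords from the account portal."},
    {"id": "hr-3", "groups": {"hr"}, "text": "Salary bands are reviewed every April."},
]


def vectorize(text):
    """Bag-of-words term counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, user_groups, k=2):
    q = vectorize(query)
    # Enforce access control before ranking so restricted text never reaches the prompt.
    visible = [d for d in DOCUMENTS if d["groups"] & user_groups]
    ranked = sorted(visible, key=lambda d: cosine(q, vectorize(d["text"])), reverse=True)
    return ranked[:k]


def build_prompt(query, docs):
    context = "\n".join(f"[{d['id']}] {d['text']}" for d in docs)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    question = "How do customers reset passwords?"
    print(build_prompt(question, retrieve(question, {"support"})))
```

Filtering before ranking is the key design choice here. A user outside the `hr` group can never receive `hr-3` as context, however closely it matches the query.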

Why Choose AIVeda’s Private LLM Development Services

Enterprise-Grade AI Engineering

AIVeda brings deep expertise in large-scale AI systems and enterprise deployments. Every private LLM is engineered for production readiness—validated for reliability, performance, and long-term maintainability within enterprise environments.

Complete Data Ownership and Control

Our private LLM solutions ensure your data, prompts, and outputs remain fully contained within your infrastructure. Enterprises retain full control over model access, usage policies, and data flows, avoiding the data-exposure risks associated with shared or public AI platforms.

Custom-Built for Your Technology Stack

Private LLMs developed by AIVeda integrate seamlessly into your existing enterprise ecosystem. From secure APIs to microservices and internal platforms, models are tailored to align with your workflows, governance standards, and system architecture.

Optimized Performance at Scale

We design private LLMs to deliver consistent, low-latency inference under real-world workloads. Through infrastructure-aware optimization and efficient serving pipelines, models maintain high accuracy and responsiveness as usage scales.

Trusted by Enterprise Innovators

Organizations across regulated and data-sensitive industries rely on AIVeda for private LLM development that balances security, performance, and operational reliability—without compromising compliance or control.

Proven MLOps and Model Governance

Our MLOps frameworks automate fine-tuning, versioning, deployment, monitoring, and rollback. This ensures private LLMs remain auditable, reproducible, and aligned with enterprise governance requirements throughout their lifecycle.
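The versioning and rollback part of such a workflow can be pictured with a tiny in-memory model registry. This is a hypothetical sketch, not AIVeda's framework. It records a SHA-256 checksum per registered version for reproducibility and keeps a stack of deployments so the previous version can be restored. Every action is also appended to an event log for auditability.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class ModelRegistry:
    versions: dict = field(default_factory=dict)     # version -> SHA-256 of the artifact
    deployments: list = field(default_factory=list)  # stack of deployed versions, live last
    events: list = field(default_factory=list)       # append-only audit trail

    def register(self, version: str, artifact: bytes) -> str:
        # The checksum identifies exactly which weights a version refers to.
        digest = hashlib.sha256(artifact).hexdigest()
        self.versions[version] = digest
        self.events.append(("register", version, digest))
        return digest

    def deploy(self, version: str) -> None:
        if version not in self.versions:
            raise KeyError(f"unknown model version: {version}")
        self.deployments.append(version)
        self.events.append(("deploy", version))

    @property
    def live(self):
        return self.deployments[-1] if self.deployments else None

    def rollback(self) -> str:
        # Retire the live version and restore the one deployed before it.
        if len(self.deployments) < 2:
            raise RuntimeError("no earlier deployment to roll back to")
        retired = self.deployments.pop()
        self.events.append(("rollback", retired, self.live))
        return self.live


if __name__ == "__main__":
    reg = ModelRegistry()
    reg.register("v1", b"weights-v1")
    reg.register("v2", b"weights-v2")
    reg.deploy("v1")
    reg.deploy("v2")
    print("live:", reg.live, "after rollback:", reg.rollback())
    print(reg.events)
```

In practice artifacts would live in object storage and the registry would be a shared service. The point is only that every deployed version is identifiable and reversible, and that the history of changes is kept.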

Technical Stack

AI and Large Language Models

PyTorch / TensorFlow – for large language model training, fine-tuning, and optimization

Parameter-Efficient Fine-Tuning (PEFT) Techniques – for adapting large models without full retraining

Quantization and Model Compression – for optimized inference within enterprise infrastructure

Natural Language Processing (NLP)

Hugging Face Transformers, SentencePiece – for tokenization, inference, and controlled fine-tuning

LangChain, LlamaIndex – for retrieval-augmented generation (RAG), tool orchestration, and secure contextual retrieval

Infrastructure, Cloud, and MLOps

On-Premise, Private Cloud, or Hybrid Environments – based on enterprise deployment requirements

Containerization with Docker and Kubernetes – for scalable, isolated model serving

CI/CD Pipelines – for automated deployment, versioning, and updates

Monitoring with Prometheus + Grafana – for real-time observability, performance tracking, and reliability
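For the monitoring side, Prometheus scrapes metrics that a service exposes over HTTP in its plain-text exposition format, and Grafana dashboards then query Prometheus. The sketch below hand-renders two such metrics for an inference service to show what gets exposed. The first is a request counter labeled by model version and status, and the second is a latency summary with only `_sum` and `_count`. The metric names are assumptions, and a real service would normally use an official Prometheus client library instead of formatting text by hand.

```python
from collections import Counter


class RequestMetrics:
    """Counts inference requests and renders them in Prometheus text format."""

    def __init__(self):
        self.requests = Counter()  # (model, status) -> request count
        self.latency_sum = 0.0     # total seconds across all requests
        self.latency_count = 0     # number of latency observations

    def observe(self, model, status, seconds):
        self.requests[(model, status)] += 1
        self.latency_sum += seconds
        self.latency_count += 1

    def render(self):
        lines = [
            "# HELP llm_requests_total Total inference requests.",
            "# TYPE llm_requests_total counter",
        ]
        for (model, status), n in sorted(self.requests.items()):
            lines.append(f'llm_requests_total{{model="{model}",status="{status}"}} {n}')
        lines += [
            "# HELP llm_request_latency_seconds Inference request latency.",
            "# TYPE llm_request_latency_seconds summary",
            f"llm_request_latency_seconds_sum {self.latency_sum}",
            f"llm_request_latency_seconds_count {self.latency_count}",
        ]
        return "\n".join(lines) + "\n"


if __name__ == "__main__":
    m = RequestMetrics()
    m.observe("v2", "ok", 0.2)
    m.observe("v2", "error", 1.0)
    print(m.render())
```

Dividing the rate of `_sum` by the rate of `_count` in a Grafana panel gives average latency per model, and the `status` label makes error rates visible per deployed version.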

Private AI. Built for Enterprise Reality.

AIVeda’s private LLM development services enable organizations to deploy powerful generative AI systems without compromising data security, compliance, or operational control. Build enterprise-grade intelligence tailored to your domain—fully owned, fully governed, and engineered for production at scale.

Our Recent Posts

We are constantly looking for better solutions. Our technology teams are constantly publishing what works for our partners

Private LLM Use Cases by Function: Legal, Support, Compliance, and Operations

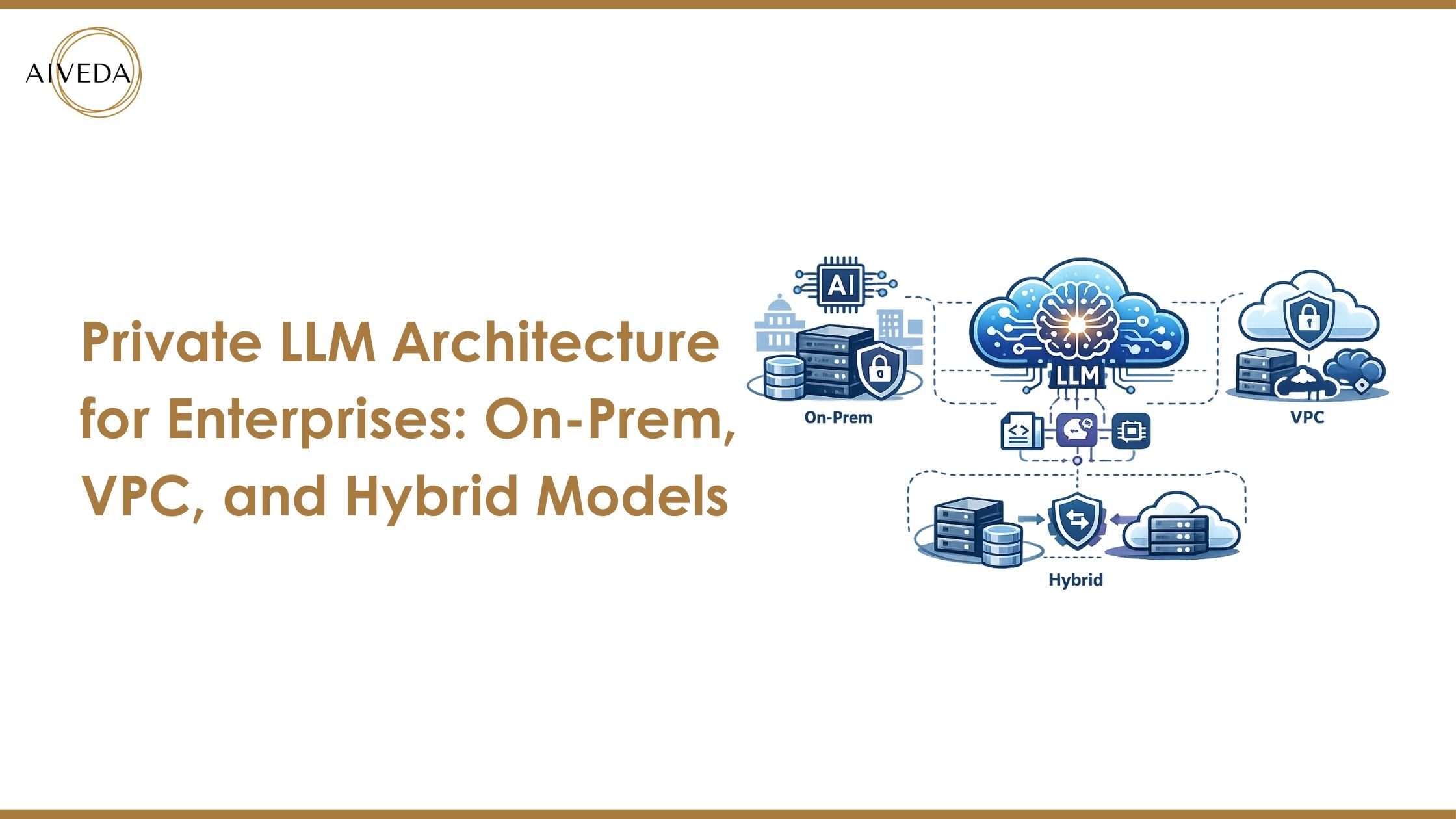

Private LLM Architecture for Enterprises: On-Prem, VPC, and Hybrid Models

Private LLM vs Public LLM: How Enterprises Choose Security, Control, and Long-Term AI ROI

© 2026 AIVeda.