Imagine a global insurance firm. Every month, thousands of claims documents flood in—policies, incident reports, legal assessments. The firm implemented a privately-hosted large-language-model solution so internal teams could query and summarise the data on-premises without ever exposing sensitive customer records to a public cloud model. Within six months, they reduced document-processing time, while preserving full control over compliance, audit trails and data governance.

This is the kind of enterprise transformation where private LLMs shift from theory to mission-critical reality. With 78% of organisations now using AI in at least one business function, the pressure on businesses to deploy solutions that are secure, scalable and compliant has never been higher.

In today’s enterprise landscape, private LLM use cases are no longer a niche experiment but a strategic imperative. Choosing a private model means you keep your data inside your firewall, scale usage across departments without leaking IP, and meet stringent regulatory demands across regions. In short, it’s about turning language-models into enterprise assets with enterprise confidence.

In the sections that follow, we’ll explore the top ten high-impact private LLM use cases for enterprises—showing you where the value lies, where the risks hide, and how you can move from pilot to production with speed and control.

Why Enterprises Are Adopting Private LLMs

Private LLMs bring AI innovation inside the walls of your organization—where your data stays encrypted, your IP remains protected, and your AI outputs reflect your company’s unique operational context. This shift has made private LLM use cases the preferred path for enterprises that view data not as an asset to be shared, but as a competitive moat to be guarded.

The advantages are tangible:

- Privacy and Data Ownership: Private models ensure sensitive datasets never leave your secured infrastructure.

- Customization and Domain Relevance: Enterprises can fine-tune the model on proprietary workflows, language, and documentation.

- Scalability with Compliance: Private deployments allow enterprises to expand AI usage without breaching regulations.

- Continuous Fine-Tuning: Private control enables regular optimization using new internal datasets, improving precision over time.

Enterprise Use Cases of Private LLMs

Public models may offer accessibility, but they fall short where data sensitivity, performance tuning, and regulatory accountability intersect. For a modern enterprise, the real ROI comes not from using AI, but from owning it—and that’s exactly what private LLM use cases make possible.

Use Case 1: Intelligent Document Processing and Knowledge Retrieval

In industries like insurance, healthcare, and law—where a single misplaced clause or missed update can trigger financial or legal exposure—document processing has always been a high-stakes, high-effort function. Take a global healthcare provider, for instance: its compliance teams handle thousands of medical records and audit forms each week. Before deploying a private model, document review cycles stretched across departments, and finding a single clause in archived PDFs could take hours.

To learn more, read our blog post on why healthcare needs private LLMs for compliance.

After integrating a secure, internally fine-tuned LLM, the same team can now query any document in seconds, asking, for example, “Show me all cases with missing patient consent forms in Q2,” and get precise, contextual responses without sharing a byte of patient data outside their firewall. That’s the power of a private LLM for document processing in action.

Private LLMs excel at turning unstructured enterprise data into structured intelligence. They can read, summarize, classify, and extract entities from millions of pages—from claims and contracts to compliance reports—with human-like accuracy. More importantly, they do this securely within the organization’s environment, ensuring sensitive data never leaves internal systems.

In legal firms, this means automated contract review and precedent search. In insurance, it translates to faster claims validation. In healthcare, it supports clinical documentation, case retrieval, and audit preparation. The result is consistent: faster insights, lower human overhead, and airtight data privacy.

In a world where compliance and speed are equally non-negotiable, private LLM use cases for document processing help enterprises achieve both—transforming static documents into dynamic knowledge systems that evolve with the business.

Use Case 2: Secure Conversational AI for Customer Support

Suppose a leading bank faced a dilemma: its customer support chatbots could resolve basic queries, but anything involving sensitive account details had to be escalated to human agents due to strict GDPR requirements. This created delays, inconsistent responses, and rising operational costs. The turning point came when the bank deployed a private, domain-trained LLM inside its own infrastructure. Within weeks, resolution rates improved by 40%, while customer data never left the organization’s secure perimeter.

That’s one of the most effective private LLM use cases in customer support—building conversational systems that are both intelligent and compliant. Unlike public models that process data in third-party environments, private deployments let enterprises manage and monitor every interaction in real time, ensuring that no sensitive data is exposed, stored, or reused for external training.

Private LLMs enable enterprises to:

- Maintain complete data sovereignty: All prompts, responses, and logs stay within the company’s network, satisfying GDPR, HIPAA, and internal audit requirements.

- Personalize at scale: Fine-tuned on historical chat data and product manuals, private models can mirror your brand’s tone and context while maintaining accuracy across thousands of queries.

- Ensure reliability and security: By integrating internal APIs and business logic, responses stay contextually correct and operationally safe.

For global enterprises handling regulated data—banking, insurance, healthcare, or even government services—the difference between a public chatbot and a private conversational agent is not just in accuracy, but in accountability.

Use Case 3: Internal Search and Enterprise Knowledge Assistant

Every enterprise struggles with the same hidden inefficiency—knowledge fragmentation. Teams store information across CRMs, emails, project management tools, document drives, and internal wikis. Finding a single answer often means pinging multiple colleagues, searching ten different systems, or digging through outdated PDFs.

Global consulting firms can tackle this by deploying a private LLM-powered internal assistant trained on their proprietary documentation, past client reports, and process manuals. The result? Employees can query the system as naturally as talking to a colleague: “What’s our data retention policy for EU clients?” or “Show me past proposals related to supply chain optimization in retail.” The assistant can deliver context-aware, verified responses within seconds, saving hundreds of work hours every month.

Unlike traditional enterprise search tools that rely on rigid keyword matching, private LLMs understand intent, context, and semantics—connecting dots across unstructured data sources.

For enterprises, this means:

- Unified access to institutional knowledge: Private LLMs act as an intelligent front-end to fragmented data silos, providing a single interface for employees.

- Data security and access control: Sensitive or role-based content remains restricted; the model operates within internal governance boundaries.

- Continuous learning: As new documents, policies, or projects are added, the assistant’s index can be refreshed (or the model periodically fine-tuned) so responses reflect the latest updates.

In a world where enterprise data doubles every 12 months, context-aware search is no longer optional—it’s a competitive necessity. By transforming static repositories into dynamic, conversational knowledge systems, Private LLM use cases for internal knowledge assistants help organizations preserve institutional intelligence and make it actionable at scale.
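To make the access-control point above concrete, here is a minimal Python sketch of role-based filtering applied before any document reaches the model. The `Document` class, the role names, and the sample corpus are all hypothetical, introduced only for illustration; a real deployment would more likely pull permissions from an existing identity system than hard-code them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    """Hypothetical internal document tagged with the roles allowed to read it."""
    doc_id: str
    text: str
    allowed_roles: frozenset


def filter_by_role(docs, user_roles):
    """Keep only the documents that share at least one role with the user."""
    roles = frozenset(user_roles)
    return [doc for doc in docs if doc.allowed_roles & roles]


# Illustrative corpus; IDs, text, and roles are placeholders, not real data.
CORPUS = [
    Document("retention-policy", "placeholder policy text", frozenset({"legal", "consultant"})),
    Document("client-proposal", "placeholder proposal text", frozenset({"consultant"})),
    Document("salary-bands", "placeholder HR text", frozenset({"hr"})),
]

# A consultant's question would only ever be answered from these documents.
visible = filter_by_role(CORPUS, ["consultant"])
print([doc.doc_id for doc in visible])
```

Filtering before retrieval, rather than asking the model to withhold restricted content, means restricted text never enters the prompt in the first place.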

Use Case 4: Code Generation and Software Development Acceleration

This is one of the most transformative private LLM use cases in software development, where efficiency meets innovation without sacrificing IP security. Unlike public code assistants that risk leaking sensitive logic into shared training data, a private model is fine-tuned and run entirely within an enterprise’s internal ecosystem, which helps keep every generated line of code compliant, consistent, and confidential.

Private LLMs empower enterprises to:

- Accelerate development cycles: Automatically generate function stubs, unit tests, or entire service layers aligned with internal frameworks.

- Enhance code quality and maintainability: Context-aware suggestions minimize syntax errors and enforce internal coding standards.

- Safeguard intellectual property: Since the model is deployed within the organization’s controlled environment, no proprietary logic or customer data leaves the perimeter.

- Enable continuous learning: The model evolves with new commits and project updates, reinforcing best practices across teams.

For businesses navigating aggressive release timelines and tight budgets, automation is no longer optional—it’s strategic. With private LLM use cases in software development, enterprises do not just code faster; they code smarter, securely leveraging their own knowledge base to build and deploy high-quality applications at scale.

Use Case 5: Compliance, Legal, and Risk Management Automation

When a leading global bank receives simultaneous compliance updates from five regulatory bodies, its legal team has to spend weeks interpreting overlapping clauses and rewriting policies. Even with automation tools, the real challenge is not reading the rules but understanding how they connect to internal processes. This is where a private LLM trained on the bank’s own compliance archives, legal templates, and past audit reports comes in.

Within days, the model can summarize new regulations, flag high-risk clauses, and generate draft versions of policy updates, all while keeping every document inside the bank’s private infrastructure. It does not just accelerate workflows; it makes compliance more predictable.

That’s the value behind private LLM use cases for compliance. Instead of outsourcing interpretation to external AI systems, enterprises can now internalize regulatory intelligence—securely, and with context.

Private LLMs can read and reason through thousands of pages of legislation, identify conflicts, and even suggest language that aligns with existing policies. They act as tireless analysts—always up to date, always in your control.

For legal and compliance leaders, that means:

- Instant clarity: Quickly understand new laws or amendments without manual research marathons.

- Risk awareness in real time: Get notified when internal documents contain outdated or noncompliant phrasing.

- Confidential by default: Sensitive contracts and client data never leave the enterprise ecosystem.

In today’s regulatory environment, where one missed update can cost millions, a private LLM for compliance transforms risk management from reactive firefighting into proactive legal foresight, and that’s a shift every enterprise needs to make.

Use Case 6: Personalized Employee Training and Learning Systems

When a large consulting firm onboards over 3,000 new analysts across continents, its biggest challenge is not the logistics; it is consistency. Each region has its own onboarding material, scattered across PDFs, intranet pages, and archived decks. Employees learn differently, unevenly, and often inefficiently.

That can change with the introduction of a private LLM-powered learning assistant, trained entirely on internal documents, client case studies, and project best practices. Instead of working through static slide decks, employees can ask the assistant, “How do we structure a risk assessment for fintech clients?” or “Show me examples of successful supply chain optimization projects.” The answers are contextually accurate, role-specific, and sourced from the company’s own institutional knowledge.

That’s the promise of a private LLM in employee training: learning that adapts to the individual, not the other way around.

Private LLMs can assess an employee’s existing knowledge, personalize learning paths, and deliver contextual micro-lessons in real time. They can explain complex workflows in plain language, offer follow-up exercises, and even generate on-the-job simulations—all while protecting proprietary content within the enterprise’s infrastructure.

For organizations, this means:

- Adaptive learning at scale: Each employee receives a personalized training experience aligned with their department, skill level, and project goals.

- Institutional knowledge retention: Internal expertise becomes accessible long after senior employees move on.

- Secure and compliant learning: All materials, datasets, and conversations remain private — critical for regulated sectors like finance, defense, or healthcare.

In a hybrid workplace where continuous upskilling is essential, private LLM use cases in employee training help enterprises turn their internal data into an always-on mentor — one that learns, evolves, and grows with the organization itself.

Use Case 7: Market Intelligence and Research Analysis

Picture a leading manufacturing enterprise that notices a silent slowdown, not in machines but in people. New hires take months to reach peak efficiency. Training modules are outdated, scattered, and too generic to match the pace of evolving tools and processes. The leadership realizes their employees do not need more training content; they need smarter training guidance.

That’s when they can turn to a private LLM fine-tuned on their internal manuals, SOPs, performance data, and project archives. Within weeks, this model can begin acting as a personal learning companion: answering role-specific questions, suggesting quick refreshers before audits, and even identifying knowledge gaps across departments. The result? Employees do not just learn faster; they learn what is relevant to their roles.

This is where private LLM use cases in employee training truly shine. Unlike generic e-learning systems, private models build context: they know the company’s tools, its compliance rules, its vocabulary. They deliver answers rooted in enterprise knowledge, not public internet data.

The result is a culture of continuous learning that evolves with the business—secure, adaptive, and deeply personal. In every conversation, the model becomes what every organization quietly needs: a patient mentor who never forgets and always speaks your company’s language.

Use Case 8: Finance and Audit Automation

For a global logistics firm, the quarterly audit used to feel like a storm—endless spreadsheets, reconciliations, and late-night reviews. Every department spoke its own data language, and small discrepancies often went unnoticed until they turned into financial red flags. The finance head knew they didn’t need more tools—they needed clarity.

When they introduced a private LLM trained on internal ledgers, invoices, and historical audit trails, the shift was immediate. The model could read complex entries, detect anomalies, and generate preliminary audit summaries that highlighted inconsistencies before humans even looked at them. It was not replacing the auditors—it was amplifying them.

That’s the strength of private LLM use cases in finance automation. They bring pattern recognition, accuracy, and consistency to some of the most detail-heavy workflows in enterprise operations — without letting a single piece of financial data leave the organization’s secure walls.

Private LLMs can parse years of balance sheets, flag duplicate transactions, and explain why a particular variance appeared in this quarter’s reports. They can generate executive summaries with contextual reasoning, not just numbers. And because they operate inside enterprise-controlled environments, every insight is traceable, every recommendation auditable.
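One of the checks mentioned above, flagging duplicate transactions, can also be run as a simple deterministic rule alongside the model, for instance to cross-check what the model reports. The Python sketch below is only an illustration under stated assumptions: the field names (`vendor`, `amount_cents`, `date`), the matching rule, and the ledger rows are all made up, and it does not describe how an LLM detects duplicates internally.

```python
from collections import defaultdict


def find_duplicate_groups(transactions):
    """Group transaction IDs that share the same vendor, amount, and date."""
    groups = defaultdict(list)
    for tx in transactions:
        # Normalise the vendor name so trivial formatting differences still match.
        key = (tx["vendor"].strip().lower(), tx["amount_cents"], tx["date"])
        groups[key].append(tx["id"])
    # Only groups with more than one entry are potential duplicates.
    return [ids for ids in groups.values() if len(ids) > 1]


# Made-up ledger rows for illustration only.
LEDGER = [
    {"id": "TX-1", "vendor": "Acme Freight", "amount_cents": 125000, "date": "2024-03-01"},
    {"id": "TX-2", "vendor": "Northwind", "amount_cents": 98000, "date": "2024-03-01"},
    {"id": "TX-3", "vendor": "acme freight ", "amount_cents": 125000, "date": "2024-03-01"},
]

print(find_duplicate_groups(LEDGER))
```

Because the rule is explicit, every flag it raises can be traced back to the exact fields that matched, which fits the auditability goal described above.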

In finance, time is money—but so is precision. With a private LLM in finance automation, enterprises no longer choose between the two. They gain both—faster closes, cleaner audits, and a level of financial transparency that keeps leadership informed and regulators satisfied.

Use Case 9: Supply Chain Optimization and Vendor Communication

A consumer electronics brand faced the same problem every quarter — demand forecasts that never quite matched reality. A minor delay from a supplier in Taiwan would ripple through warehouses in Germany and retail shelves in Dubai. The supply chain team had data, but not insight — spreadsheets couldn’t tell them why patterns were shifting or when to act.

That changed when the company implemented a private LLM connected to its ERP, procurement logs, and vendor communication threads. Suddenly, the model wasn’t just reading data — it was interpreting it. It could predict demand surges, flag at-risk suppliers, and even draft contextual responses to vendors, all within the company’s secure environment.

That’s the quiet revolution of private LLM use cases for supply chain management — turning reactive logistics into predictive intelligence.

A private model understands the rhythm of an enterprise’s operations — the seasonality, the shipping timelines, the vendor reliability scores — and uses that context to make informed recommendations. It doesn’t just automate workflows; it keeps them aligned.

For enterprises stretched across continents, where one missing shipment can mean millions in lost revenue, this kind of intelligence becomes indispensable. With a private LLM for supply chain management, organizations gain the ability to see around corners, anticipating disruptions before they happen and responding with precision that only comes from knowing your data inside out.

Use Case 10: HR and Recruitment Intelligence

A global IT services firm was hiring at scale — hundreds of roles, thousands of resumes, and endless rounds of screening. Recruiters were buried under repetitive tasks, while qualified candidates slipped through the cracks. The leadership team realized that the issue wasn’t a lack of talent — it was a lack of visibility.

So, they deployed a private LLM fine-tuned on past hiring data, performance reviews, and job descriptions. Within weeks, it began identifying patterns the human eye missed — which profiles led to long-term success, what skill combinations performed best in specific teams, and where internal talent could be reallocated before hiring externally. Recruiters no longer spent hours filtering; they spent minutes deciding.

That’s the efficiency edge of private LLM use cases in HR and recruitment. These models bring intelligence and context into every people decision, from resume screening to internal mobility planning. They can read between the lines of experience, match nuanced skills with evolving roles, and even perform sentiment analysis on employee feedback to detect engagement risks early.

Unlike public AI models that rely on external datasets, private deployments learn from an organization’s own people data, securely and responsibly, provided that historical hiring data is itself audited so past biases are not reinforced. The result is not just faster hiring. It’s smarter workforce planning, powered by insight, not instinct.

In the enterprise world, talent is the true differentiator. With a private LLM in HR and recruitment, organizations do not just hire better; they understand their people better.

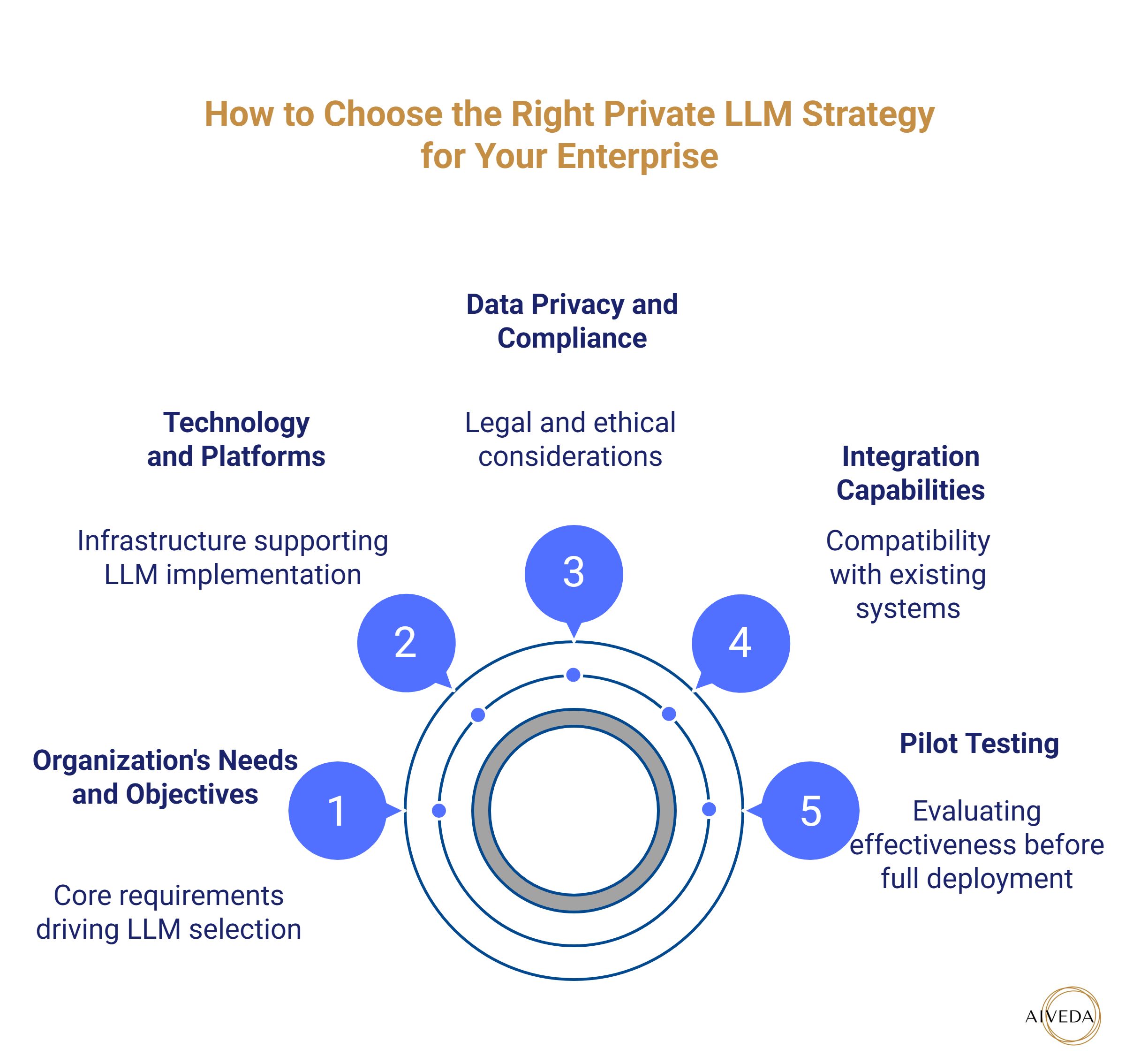

How to Choose the Right Private LLM Strategy for Your Enterprise

Every enterprise wants the power of AI—but few are ready for the complexity that comes with it. The question is not whether to adopt LLMs, but how to align them with your systems, your data, and your security boundaries. That’s where strategy becomes everything.

When choosing private LLM use cases for enterprise integration, the foundation lies in selecting the right deployment model:

- On-premise: Full control, zero compromise. Ideal for organizations handling confidential data or operating under strict regulations.

- Hybrid: The best of both worlds — private fine-tuning and secure cloud scalability. Suitable for enterprises balancing performance and compliance.

- Secure cloud: Fast, flexible, and cost-efficient for companies that prioritize speed of deployment but maintain strict data encryption and access governance.

But deployment is only half the equation. Integration defines success. A Private LLM should connect effortlessly with your internal systems—CRMs, HRMS, data lakes, or analytics dashboards—through well-governed APIs. Without that connection, intelligence remains isolated.

In reality, building a Private LLM strategy is not about infrastructure or algorithms. It’s about alignment — between technology and business intent. The goal is simple: create a model that understands your data, speaks your processes, and scales with your ambitions.

That’s what makes choosing a private LLM for enterprise integration not just a technical decision but a strategic one.

Challenges in Implementing Private LLMs (and How to Overcome Them)

Every enterprise wants control over its data—until it meets the complexity that comes with it. Deploying a private LLM sounds simple in theory: host it internally, feed it proprietary data, and secure every endpoint. In practice, it’s an orchestration of moving parts—data readiness, governance, performance tuning, and cost alignment — all under one roof.

A global energy firm learned this early. Their first internal LLM prototype delivered impressive accuracy but inconsistent behavior across departments. The reason wasn’t the model—it was the data. Fragmented records, unstructured files, and conflicting schemas made it impossible for the model to “understand” the enterprise as one entity. The lesson was clear: good models fail on bad data.

That’s one of the core private LLM deployment challenges—ensuring data alignment before fine-tuning begins. Enterprises must clean, unify, and contextualize internal datasets so the model can reflect business realities, not data silos.

Then comes governance—setting clear boundaries on how the model is trained, what it can access, and how outputs are validated. Without that structure, private doesn’t always mean secure. A well-governed model maintains audit trails, enforces role-based permissions, and ensures traceability across every interaction.
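The governance ideas above (audit trails, role-based permissions, traceability) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: `AuditedModel`, `stub_generate`, the role and task names, and the log fields are all hypothetical, and the stub stands in for whatever internally hosted model an enterprise actually runs.

```python
import hashlib
from datetime import datetime, timezone


class AuditedModel:
    """Wraps a text-generation callable with permission checks and an audit log."""

    def __init__(self, generate, permissions):
        self._generate = generate        # any callable: prompt -> response
        self._permissions = permissions  # role -> set of allowed task names
        self.audit_log = []

    def ask(self, user, role, task, prompt):
        allowed = task in self._permissions.get(role, set())
        # Record every attempt, including denied ones; store a hash, not the prompt.
        self.audit_log.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "task": task,
            "allowed": allowed,
            "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        })
        if not allowed:
            raise PermissionError(f"role {role!r} may not run task {task!r}")
        return self._generate(prompt)


def stub_generate(prompt):
    """Stand-in for a call to an internally hosted model (assumption)."""
    return f"stub response ({len(prompt)} prompt characters)"


model = AuditedModel(stub_generate, {"analyst": {"summarize"}})
print(model.ask("dana", "analyst", "summarize", "Summarize the new policy."))
try:
    model.ask("dana", "analyst", "export", "Export all records.")
except PermissionError as err:
    print("denied:", err)
print([entry["allowed"] for entry in model.audit_log])
```

Logging a hash of the prompt rather than the prompt itself is one possible design choice: it keeps interactions traceable without copying sensitive text into the log. Whether that trade-off is right depends on the organization's audit requirements.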

Finally, there is cost optimization—the silent variable. Hosting large models, maintaining GPU infrastructure, and retraining over time can quickly inflate budgets. The answer lies in scaling intelligently: start small, measure value, and expand where ROI is proven.

These challenges aren’t deterrents — they’re design parameters. Enterprises that address them early build not just a working model, but a sustainable one. Because in the long run, Private LLMs aren’t a plug-in solution — they’re an ecosystem. And navigating private LLM use cases and deployment challenges well is what separates experimentation from true enterprise transformation.

The Future of Private LLMs in Enterprise AI Transformation

The first wave of enterprise AI was about experimentation. The next will be about precision. As organizations mature in their use of language models, the question is no longer “Can we build one?” but “How deeply can it understand us?”

Private LLMs are entering that phase of evolution. The future isn’t just bigger models — it’s smarter ecosystems. We’re seeing the rise of multi-agent systems, where specialized models collaborate across departments: one managing compliance queries, another analyzing customer sentiment, a third optimizing resource allocation. Together, they form an adaptive intelligence network that mirrors how real enterprises operate — not in silos, but in conversation.

Retrieval-Augmented Generation (RAG) is becoming the quiet revolution underneath it all. By connecting private LLMs to enterprise databases and document repositories at query time, RAG grounds responses in live knowledge rather than static training data alone, making them verifiable, current, and contextual. It’s how an AI assistant can now reference last quarter’s performance data or quote directly from an internal memo.
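For readers who want to see the shape of the idea, here is a minimal Python sketch of the retrieve-then-prompt loop behind RAG. It rests on several assumptions: the passages and source IDs are invented, the word-overlap scoring is a toy stand-in for the embedding-based search a real system would typically use, and the final prompt would be sent to an internally hosted model that is not shown here.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query, passage):
    """Toy relevance score: number of query tokens that also appear in the passage."""
    q = Counter(tokenize(query))
    p = Counter(tokenize(passage))
    return sum(min(q[t], p[t]) for t in q)


def retrieve(query, passages, top_k=2):
    """Return up to top_k passages with a non-zero score, best first."""
    ranked = sorted(passages, key=lambda p: score(query, p["text"]), reverse=True)
    return [p for p in ranked[:top_k] if score(query, p["text"]) > 0]


def build_prompt(query, retrieved):
    """Assemble a prompt that asks the model to answer only from retrieved context."""
    context = "\n".join(f"[{p['source']}] {p['text']}" for p in retrieved)
    return (
        "Answer using only the context below and cite the source in brackets.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\n"
    )


# Invented passages standing in for an internal document repository.
PASSAGES = [
    {"source": "memo-017", "text": "Travel requests need manager approval."},
    {"source": "q3-report", "text": "Revenue last quarter grew compared with the prior quarter."},
    {"source": "hr-handbook", "text": "New hires complete onboarding in the first week."},
]

question = "How did revenue change last quarter?"
print(build_prompt(question, retrieve(question, PASSAGES)))
```

Because each retrieved passage carries its source ID into the prompt, the model's answer can point back to the document it came from, which is what makes the response verifiable.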

Then there’s the emergence of fine-tuned vertical models—LLMs specialized for industries like finance, healthcare, logistics, or legal. Instead of being generalists, these models understand compliance clauses, clinical language, or risk metrics with precision. They speak the language of the enterprise — literally.

This is where private LLM use cases shaping enterprise AI come full circle. The value shifts from automation to augmentation — models that do not just answer, but advise. They will become embedded into workflows, transforming static systems into intelligent environments that anticipate business needs.

The enterprises leading this shift will be the ones that see Private LLMs not as tools, but as long-term assets — trained on their DNA, powered by their data, and evolving with their strategy.

How We Simplify Private LLM Implementation for You

Building a Private LLM is not the hard part—making it work seamlessly inside an enterprise is. That’s where most AI projects stall: great technology, poor integration. We designed our enterprise LLM services to close that gap — making advanced AI usable, secure, and fully aligned with how your business actually operates.

Our model is built for three essentials that every enterprise demands: scalability, security, and control. It adapts to your infrastructure, respects your data boundaries, and delivers intelligence where it’s needed most, inside your ecosystem. From the first dataset to production rollout, every stage is handled end-to-end, with precision and transparency.

We help you:

- Deploy with confidence: Whether on-premise, hybrid, or private cloud, our architecture scales to your governance and compliance needs.

- Train with context: Every model is fine-tuned on your internal data — policies, product guides, communication records — so it speaks your organization’s language from day one.

- Integrate with ease: Our API-first approach ensures your Private LLM connects smoothly with CRMs, ERPs, ticketing tools, and existing analytics systems — no disruption, no friction.

- Stay compliant: Data never leaves your controlled environment. Governance frameworks are embedded into every workflow.

Our approach makes private LLM integration straightforward. We handle the complexity behind the scenes so your teams can focus on what matters: results.

The outcome is a Private LLM that does not just exist in your enterprise — it becomes part of it. Scalable. Secure. Seamless.

Conclusion

Enterprises are no longer asking if they need AI—they are asking how much of it they can truly own. That’s the defining shift of this decade. Private LLMs give organizations what public models can’t: autonomy. The freedom to use intelligence without surrendering control.

Across industries, private LLM use cases in enterprise innovation are proving that the future of AI isn’t about outsourcing capability—it’s about internalizing it. When knowledge stays within the enterprise, models become more accurate, more contextual, and more aligned with business intent. Decisions get faster, compliance gets stronger, and every workflow becomes a little more intelligent.

Private models do not just enhance productivity—they redefine trust in automation. And in the enterprise world, trust is everything.

Discover how our enterprise LLM solutions can help you deploy your private LLM use cases securely and efficiently. Contact us to learn more.