Generative AI is rapidly reshaping how modern companies operate, but it’s also exposing serious vulnerabilities for enterprises that rely on public AI platforms. Today, organizations need more than just powerful AI; they need secure, compliant, and fully controlled systems. It’s no surprise that over 27% of organizations have already restricted the use of public GenAI tools due to data-exposure risks, highlighting a major shift toward secure alternatives such as private LLM deployment.

A private large language model gives enterprises a dedicated AI system deployed entirely within their own environment, whether through on-prem LLM infrastructure, a private cloud LLM deployment, or a hybrid approach. Instead of sending sensitive information to public services, the model processes data securely inside your controlled environment. Your AI can then draw on your proprietary knowledge, follow your governance rules, and strengthen your compliance posture.

As boardrooms push for AI-driven efficiency while regulators tighten expectations, private LLMs have become essential for organizations handling sensitive data, regulated workflows, and high-stakes decision-making. For forward-thinking enterprises, the move to private, self-hosted, or on-premise LLMs isn’t just an upgrade. It’s a strategic shift toward long-term security, competitive advantage, and operational resilience.

What Is a Private LLM?

A private LLM is a large language model deployed and controlled entirely inside an organization’s secure environment:

- A private cloud VPC

- An on-prem LLM infrastructure

- Or a hybrid setup that blends both

It ensures that all data, logs, computation, and model execution stay within the company’s boundary, giving enterprises full autonomy.

Companies like AIVeda specialize in designing and deploying private cloud LLM deployments that meet enterprise security and performance requirements.

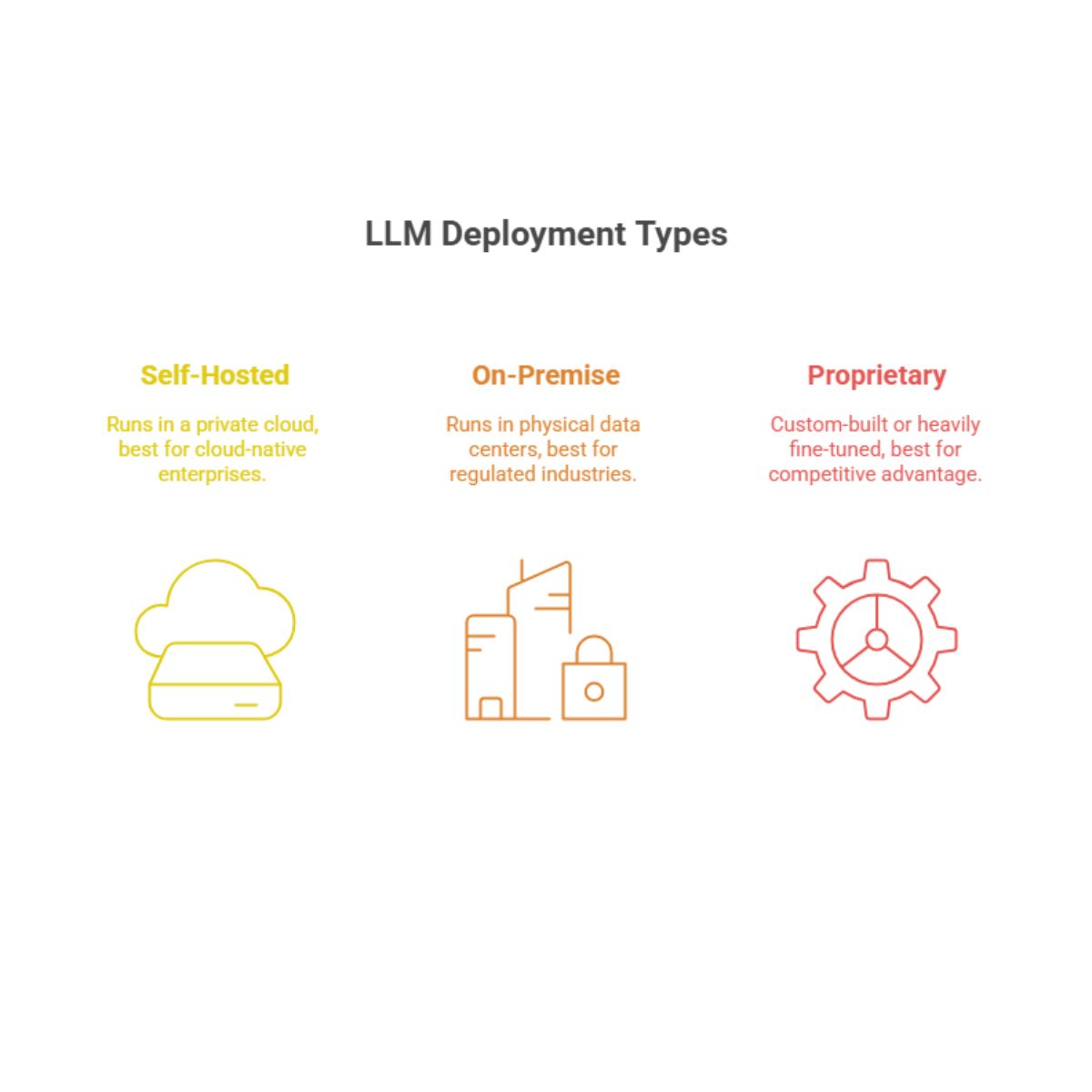

Common Private LLM Deployment Models

All of these deployment models qualify as a “private LLM”; the fundamental differentiator is control and isolation, not specific infrastructure.

What Is Not a Private LLM

A private LLM is not:

- Public AI tools such as ChatGPT, Gemini, and Claude

- Standard SaaS AI APIs

- Open-source LLMs hosted by a third party that controls the runtime

- APIs that export your data to third-party servers

If you do not control the model runtime, it is not private.

For a complete walkthrough of building and deploying private LLMs, check out our private LLMs development guide.

Why Enterprise Leaders Are Moving to Private LLMs

Think of an LLM as an advanced digital brain. Using natural, human-like language, it can:

- Answer questions

- Summarize complex information

- Write content

- Classify documents

- Analyze patterns

- Assist with decisions

But while the “brain” is powerful, where it lives matters even more for enterprises.

Private LLM vs ChatGPT: Business Case for Control

Cloud LLM vs On-Prem LLM: Deployment Choices Explained

Self-Hosted in the Cloud (Private VPC/Subnet)

Best for: Most businesses looking for cost-effective management, quick implementation, and flexibility.

Why it works:

- Elastic Scaling: Optimize costs by effortlessly scaling up during periods of high usage and down when demand is lower.

- Managed Infrastructure: Your cloud provider takes care of patches, upgrades, and infrastructure upkeep, so you can wave goodbye to hardware administration.

- Quick Deployment: Start operating in a matter of weeks rather than months.

Trade-off: Your data is hosted on a third-party provider’s infrastructure, so maintaining security requires strict network isolation and encryption.

On-Premise LLM


Best for: Businesses with strict security requirements or legal obligations (banking, defense, healthcare).

Why it works:

- Total Control: Everything remains in-house, including the hardware, physical security, and access control.

- Data Residency Compliance: Ideal for sectors where data must adhere to regulations or remain within particular jurisdictions.

- Air-Gapped Deployment: For increased security, run completely offline if necessary, with no connection to the outside world.

Trade-off: Full control comes with full responsibility. Your team manages everything, including maintenance, disaster recovery, scaling, and monitoring.

Hybrid Deployment: The New Standard

Most enterprises choose hybrid setups:

- Cloud for training and scale-heavy tasks

- On-prem for sensitive operations and restricted data

This balances cost, performance, and compliance.
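The hybrid split can be sketched as a simple request router that keeps restricted or sensitive-looking prompts on-prem and sends everything else to the private cloud. This is a minimal sketch, assuming two hypothetical endpoints (`ON_PREM_ENDPOINT`, `CLOUD_ENDPOINT`) and a few illustrative regex patterns standing in for a real data-classification or DLP service; none of these names come from a specific product.

```python
import re
from dataclasses import dataclass

# Hypothetical endpoints: replace with your own inference services.
ON_PREM_ENDPOINT = "https://llm.onprem.internal/v1"
CLOUD_ENDPOINT = "https://llm.private-vpc.example/v1"

# Illustrative patterns only; a real deployment would use a proper
# data-classification / DLP service instead of a handful of regexes.
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),                       # SSN-like numbers
    re.compile(r"\b(?:\d[ -]?){13,16}\b"),                      # card-number-like digit runs
    re.compile(r"\b(patient|diagnosis|MRN)\b", re.IGNORECASE),  # health-record keywords
]


@dataclass(frozen=True)
class Route:
    endpoint: str
    reason: str


def route_request(prompt: str, data_classification: str = "internal") -> Route:
    """Pick an endpoint: explicit labels first, pattern matching as a fallback."""
    if data_classification == "restricted":
        return Route(ON_PREM_ENDPOINT, "classified as restricted")
    for pattern in SENSITIVE_PATTERNS:
        if pattern.search(prompt):
            return Route(ON_PREM_ENDPOINT, f"matched {pattern.pattern!r}")
    return Route(CLOUD_ENDPOINT, "no sensitive markers found")


if __name__ == "__main__":
    for text in ("Summarize Q3 marketing themes", "Patient notes: diagnosis pending"):
        route = route_request(text)
        print(f"{text!r} -> {route.endpoint} ({route.reason})")
```

Checking classification labels before pattern matching keeps the policy easy to audit, and returning a reason with each route means the decision can be logged alongside the request.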

Core Benefits of Private LLMs for Enterprises

1. Privacy & Compliance

Private LLMs help ensure:

- Zero external data sharing

- Alignment with GDPR, HIPAA, SOC 2, ISO

- Audit-friendly governance

- Secure access controls

2. Customization & Competitive Advantage

With custom LLM deployment, companies can:

- Fine-tune models to internal language and workflows

- Maintain brand tone and policy alignment

- Build proprietary AI advantages that competitors cannot copy

3. Cost Control at Scale

Instead of token-based pricing, private LLMs offer:

- Predictable cost structure

- Clear long-term ROI

- No usage-driven cost volatility

- No vendor lock-in

Private cloud LLM deployments often lower costs once usage exceeds a break-even volume.
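The break-even point can be estimated with simple arithmetic: a fixed-cost private deployment becomes cheaper once monthly token volume multiplied by the per-token API price exceeds the fixed monthly cost. The sketch below uses hypothetical figures (USD 20,000 per month for GPU instances and operations, USD 10 per million tokens as a blended API price) and assumes the private deployment has enough capacity for the volume in question; it is an illustration, not a pricing model.

```python
def monthly_api_cost(tokens_per_month: float, usd_per_million_tokens: float) -> float:
    """Cost of serving the given monthly volume through a per-token API."""
    return tokens_per_month / 1_000_000 * usd_per_million_tokens


def break_even_tokens(fixed_monthly_cost: float, usd_per_million_tokens: float) -> float:
    """Monthly token volume above which a fixed-cost private deployment is cheaper.

    Assumes the fixed cost already covers this volume; past the deployment's
    capacity you would add hardware, which raises the fixed cost.
    """
    return fixed_monthly_cost / usd_per_million_tokens * 1_000_000


if __name__ == "__main__":
    fixed = 20_000.0  # hypothetical: GPU instances + operations, USD/month
    price = 10.0      # hypothetical blended API price, USD per million tokens
    print(f"Break-even: {break_even_tokens(fixed, price):,.0f} tokens/month")
    # prints "Break-even: 2,000,000,000 tokens/month"
    for volume in (1e9, 3e9):
        api = monthly_api_cost(volume, price)
        print(f"{volume:,.0f} tokens: API ${api:,.0f} vs private ${fixed:,.0f}")
```

Below the break-even volume, the per-token API is cheaper; above it, each additional token widens the gap in favor of the private deployment until new hardware is needed.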

4. Performance & Reliability

Private deployments offer:

- Low latency, with no round trip to an external API

- No API rate limits

- No reliance on external uptime

- Capacity sized and tuned to your own workloads

This makes them ideal for mission-critical workloads.

Enterprise Use Cases: Where Private LLMs Deliver ROI

Customer Support Automation

Private LLMs trained on your support tickets, FAQs, and product documentation can dramatically reduce workload. Some enterprises report a 40-60% drop in ticket volume, faster resolutions, and responses tailored specifically to their products and policies.

Knowledge Management

Employees can use a secure RAG-enabled private LLM to ask questions in natural language and get accurate answers from internal documents right away. This reduces information bottlenecks and increases efficiency across teams.
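The retrieval step behind a RAG-enabled assistant can be illustrated in a few lines. This minimal sketch swaps in bag-of-words cosine similarity for the embedding model and vector database a production system would normally use, and the document IDs and contents are made up. It ranks internal documents against a question and builds a prompt that asks the private LLM to answer only from the retrieved context.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Return the IDs of the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda doc_id: cosine(q, Counter(tokenize(documents[doc_id]))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, documents: dict[str, str], k: int = 2) -> str:
    """Ground the question in retrieved context before sending it to the private LLM."""
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in retrieve(query, documents, k))
    return (
        "Answer using only the context below and cite document IDs. "
        "If the answer is not in the context, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    # Hypothetical internal documents.
    docs = {
        "hr-leave": "Employees accrue 20 days of paid leave per year.",
        "it-vpn": "Connect to the VPN before accessing internal dashboards.",
        "fin-expense": "Expense reports must be filed within 30 days.",
    }
    print(build_prompt("How many days of paid leave do employees get?", docs, k=1))
```

Because only the retrieved passages reach the model, the same access controls that govern the document store also limit what the assistant can reveal.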

Contract and Legal Review

By training a model on your contracts and internal legal frameworks, you can automatically flag risky clauses, extract key terms, and propose redlines. This lets your legal team focus on high-risk items and can speed up contract cycles by nearly 60%.

Code Generation and Developer Productivity

A private LLM trained on your codebase and architecture patterns provides developers with context-aware suggestions that match your standards. This improves code quality, accelerates onboarding, and boosts overall engineering efficiency.

Financial Analysis and Risk Management

Custom private LLMs can draw on your previous deals, market knowledge, and risk rules to support faster, better-informed decisions. They help identify risks early, evaluate deals more accurately, and align recommendations with your firm’s specific exposure.

For detailed ROI examples and case studies, see our article on enterprise use cases for private LLMs.

High-ROI Enterprise Use Cases for Private LLMs

Private LLM deployments unlock significant ROI in functions such as:

Customer Support Automation

Reduce ticket volume using AI copilots trained on internal knowledge.

Knowledge Management with RAG

Connect the LLM to enterprise documents for real-time retrieval.

Contract & Legal Review

Accelerate redlining, compliance checks, and risk identification.

Internal Code Generation

Boost developer productivity with secure, in-house AI copilots.

Financial Analysis & Risk Modeling

Automate investment memos, credit assessments, and fraud detection.

Companies like AIVeda help enterprises deploy private large language models across these high-impact areas.

When Should an Enterprise Use a Private LLM?

Clear Signals You Need a Private LLM

A private LLM is the right choice when your company handles highly sensitive data, operates under rigorous legal frameworks, or depends on extensive domain-specific knowledge. If you work with sensitive information or trade secrets, face board-level scrutiny, or run large, recurring AI workloads, a private model provides compliance, security, and long-term cost savings. It is also the best option when AI becomes a strategic differentiator rather than just a productivity tool, and when specialized knowledge or dependable data control is vital to your operations.

When Public LLMs Are Still Right

Public LLMs still work best during experimentation phases, non-sensitive use cases, or for teams with limited engineering capacity. They’re ideal for marketing content, general ideation, and low-volume tasks where cost and speed matter more than accuracy or data governance. Most enterprises eventually adopt a hybrid model, using public LLMs for everyday creativity and private LLMs for secure operations like customer support, compliance, knowledge management, contracts, and internal risk models.

The Hybrid Decision

Most enterprises use both:

- Public LLM: Marketing, brainstorming, and general knowledge.

- Private LLM: Customer support, knowledge management, contract analysis, risk assessment, compliance workflows.

Open-Source LLMs as the Foundation

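Open-source models like Llama 2, Mistral, and Falcon provide organizations with a robust, cost-effective foundation for private AI development. Their main advantage is flexibility: openly available weights, transparency into model architecture, and the freedom to customize and deploy models without vendor lock-in. License terms still vary, though. Mistral 7B and Falcon 7B/40B are released under Apache 2.0, while Llama 2 uses Meta’s community license, which adds usage restrictions, so review each license before deployment.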

Companies typically run an open-source LLM in a private cloud or on-premise deployment, fine-tune it with proprietary datasets, and use Retrieval-Augmented Generation (RAG) to ground responses in their internal knowledge base.

With access restrictions and audit logs layered on top, the result is a secure, business-specific LLM that operates like a proprietary model without the exorbitant cost of developing one from scratch. For most enterprises, open-source LLMs provide an excellent basis for advanced, domain-specific intelligence.

Common Objections – And Honest Answers

“Isn’t this too expensive?”

Costs are upfront but predictable. At sufficient scale, private LLMs can be cheaper than API-based public AI tools.

“Can’t we just use open source?”

Yes, but only if deployed privately, secured correctly, and governed properly.

“We don’t have AI expertise.”

Vendors like AIVeda offer full-stack implementation, MLOps, and ongoing support.

How to Build a Private LLM Strategy

Phase 1: Assessment (Months 1-2)

- Map current AI usage and risks.

- Identify high-impact use cases.

- Audit proprietary data for fine-tuning potential.

- Align stakeholders (security, legal, finance, business units).

Phase 2: Pilot (Months 3-6)

- Select one high-impact use case (e.g., customer support, knowledge search).

- Choose deployment: Cloud or on-prem? Vendor-managed or DIY?

- Build and measure: Track time saved, cost avoidance, quality gains.
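Measuring a pilot can start with a before-and-after comparison. The sketch below assumes a customer support pilot and hypothetical metrics (`tickets`, `avg_handle_minutes`, a 1-5 `quality_score`, and a loaded labor cost per hour); substitute whatever your pilot actually tracks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PilotSnapshot:
    tickets: int               # hypothetical: tickets handled in the period
    avg_handle_minutes: float  # hypothetical: mean handling time per ticket
    quality_score: float       # hypothetical: mean QA rating on a 1-5 scale


def pilot_summary(baseline: PilotSnapshot, pilot: PilotSnapshot,
                  loaded_cost_per_hour: float) -> dict[str, float]:
    """Time saved, cost avoided, and quality change versus the baseline period."""
    minutes_saved = (baseline.avg_handle_minutes - pilot.avg_handle_minutes) * pilot.tickets
    hours_saved = minutes_saved / 60
    quality_change = (pilot.quality_score - baseline.quality_score) / baseline.quality_score
    return {
        "hours_saved": round(hours_saved, 2),
        "cost_avoided": round(hours_saved * loaded_cost_per_hour, 2),
        "quality_change_pct": round(quality_change * 100, 2),
    }


if __name__ == "__main__":
    baseline = PilotSnapshot(tickets=1000, avg_handle_minutes=12.0, quality_score=4.0)
    pilot = PilotSnapshot(tickets=1000, avg_handle_minutes=9.0, quality_score=4.2)
    print(pilot_summary(baseline, pilot, loaded_cost_per_hour=50.0))
```

Capturing the baseline before the pilot starts matters more than the formula: without it, time saved and cost avoidance cannot be attributed to the LLM.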

Phase 3: Scale (Months 7-12)

- Roll out to additional use cases.

- Expand custom LLM development with deeper fine-tuning.

- Optimize infrastructure and costs.

- Establish governance: Audit trails, access controls, monitoring.

Phase 4: Embed (Year 2+)

- Integrate into core workflows (CRM, ERP, enterprise search).

- Strengthen your proprietary AI advantage over time.

- Continuous improvement and expansion.

For a detailed technical blueprint, visit our complete guide to private LLM development.

For cost modeling, check out our build vs. buy vs. SaaS cost breakdown.

Security & Vendor Considerations

What Secure LLM Deployment Really Means

True security requires:

- Encryption of data in transit & at rest

- Strict access controls

- Full audit logs

- Model guardrails

- Strong MLOps discipline

- Governance across lifecycle
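Two of these requirements, strict access controls and full audit logs, can be illustrated together. This is a minimal sketch, assuming a hypothetical role-to-permission table and an in-memory log; a real deployment would pull roles from its identity provider and write to durable, access-restricted storage. Each audit entry stores the hash of the previous one, so altering an earlier entry is detectable when the chain is verified.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping; in practice this comes from your IdP/IAM system.
ROLE_PERMISSIONS = {
    "analyst": {"query:general"},
    "legal": {"query:general", "query:contracts"},
    "admin": {"query:general", "query:contracts", "model:update"},
}

GENESIS_HASH = "0" * 64


def _digest(body: dict) -> str:
    """Stable SHA-256 over the entry body (keys sorted for determinism)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only log; each entry includes the previous entry's hash."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, record: dict) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        body = {**record, "prev_hash": prev_hash}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


def authorize(user: str, role: str, permission: str, log: AuditLog) -> bool:
    """Check a permission and record the decision, whether allowed or denied."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    log.append({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "permission": permission,
        "allowed": allowed,
    })
    return allowed
```

Logging denied requests as well as allowed ones is deliberate: failed access attempts are often the most useful signal during a security review.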

How to Evaluate Private LLM Vendors

A good vendor must offer:

- Data sovereignty guarantees

- Compliance certifications

- Architectural transparency

- Long-term SLAs

- An exit strategy (no lock-in)

Leading vendors like AIVeda provide enterprise-grade private LLM deployment with a strong focus on scalability, compliance, and performance.

For secure deployment architecture and operational best practices, check out our guide on how to build secure private LLMs.

Why AIVeda Is the Trusted Partner for Secure Private LLM Deployment

For businesses seeking to securely deploy private large language models and transform how AI supports their operations, AIVeda stands out as a key partner. Unlike generic AI vendors, AIVeda specializes in customized AI systems that meet enterprise governance, compliance, and data sovereignty requirements, including private cloud LLM deployment, on-premises LLM infrastructure, and hybrid models designed for different regulatory contexts and maturity levels.

Drawing on extensive experience in regulated sectors including BFSI, healthcare, manufacturing, and government, AIVeda ensures that sensitive data never leaves your secure perimeter and that models are fine-tuned on your business data for domain-specific insight.

Our end-to-end methodology includes discovery, infrastructure planning, secure deployment, continuous tuning, and enterprise integration, all supported by strong governance and auditability. AIVeda helps enterprises unlock AI value while keeping control over data, access, and model behavior, transforming private LLMs into a dependable competitive advantage rather than just another technology initiative.

Partner with AIVeda to simplify your private LLM journey.

Conclusion

The shift toward private LLMs marks a major moment in enterprise AI adoption. Businesses increasingly understand that while public tools offer convenience, they cannot provide the privacy, customization, or control needed for mission-critical operations. Private LLM deployment delivers secure, scalable, long-term value.

By partnering with experts like AIVeda, enterprises can deploy private large language models that enhance productivity, strengthen governance, and create a durable competitive advantage. The organizations that act now will be the ones shaping the future of intelligent operations with private AI at the center.

FAQs

1. What is a private LLM exactly? What distinguishes it from a proprietary LLM?

A private LLM is a language model that you run in your own environment, such as your cloud VPC or your on-premise servers. A proprietary LLM is owned and fully controlled by its vendor: you access it through the vendor’s API or platform, and you cannot host or modify the model itself. Examples include OpenAI’s GPT models and Anthropic’s Claude.

2. Can I use pre-existing models, or must I create a private LLM from scratch?

You do not need to start from scratch. Adapting a pre-existing foundation model to your requirements, for example through fine-tuning or retrieval-augmented generation (RAG), lets you build on its capabilities while saving time and resources.

3. What distinguishes cloud-based private LLMs from on-premise ones?

Cloud-based private LLMs run in an isolated cloud environment with scalable resources and lower upfront costs, while on-premise LLMs are hosted on your own hardware, giving you complete control over security.

4. What are the best applications for a private LLM?

Private LLMs are appropriate for industries that require high security, regulatory compliance, or data isolation, such as defence, healthcare, and finance.

5. How long does it take to deploy a private LLM?

A private cloud LLM deployment can typically be launched within weeks, while an on-prem LLM deployment usually takes longer owing to hardware procurement and on-prem LLM infrastructure requirements.

6. Is a private LLM secure? What prevents data breaches?

When properly configured, yes. Private LLMs use encryption, access controls, and isolation (a VPC or an air-gapped network) to protect data and prevent unauthorized access or leakage.

7. Is it possible to build a private LLM that runs completely offline in an air-gapped environment?

For optimal security, on-premise LLMs can be installed in an air-gapped setting that offers total isolation from the internet.

8. Which kinds of data work well with private LLMs?

Private LLMs are well suited to sensitive, regulated, or confidential data that must be strictly secured and handled in compliance with regulations, such as financial records, medical records, and national security information.

9. If foundation models advance, is there a chance that my private LLM will become out of date?

Yes. As foundation models improve, your private LLM may need updates, such as moving to a newer base model or repeating fine-tuning, to stay competitive and perform well.

10. What characteristics should I look for in a private LLM provider (assuming I choose a managed service)?

Look for providers with solid security protocols, industry experience, scalability options, dependable support, and the ability to integrate with your current infrastructure.

Also check out: How Enterprises Deploy Private LLMs Securely.